At the 2025 Supercomputing Ecological Development Forum, Longjing Technology proposes a new paradigm for bringing AI into real-world use

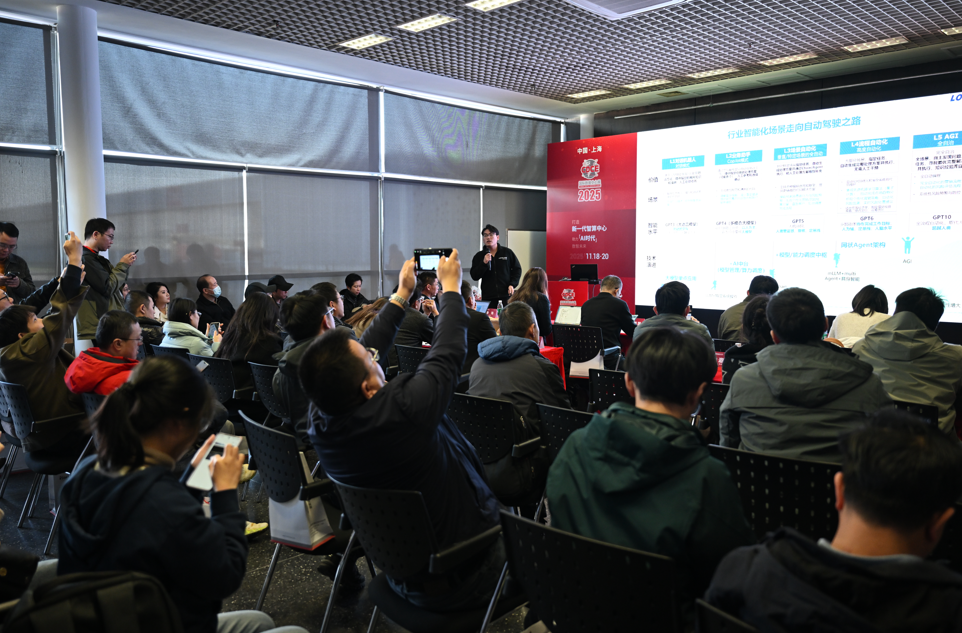

From November 18th to 20th, the CDCE International Data Center and Cloud Computing Exhibition was held at the Shanghai New International Expo Center.

The highlights of the exhibition went well beyond the booths and extended to a carefully planned lineup of concurrent forums. The 2025 Supercomputing Ecological Development Forum was held on November 19th, where Li Genguo, Director of Shanghai Supercomputing Center, gave a speech on computing power as the foundation of new quality productivity.

The forum, with the theme of "Building the Foundation with Computing Power and Empowering the Industry with Digital Intelligence", also invited Li Wei, AI Product Director of Longjing Technology, to participate.

In today's era of surging computing power and the emergence of large models, how can AI truly "land"?

Addressing this question at the 2025 Supercomputing Ecological Development Forum, Li Wei, AI Product Director of Longjing Technology, energized the room by stating that AI applications are the true carriers of commercial value in this technological transformation.

01

AI is no longer just a technology, but a two-wheel drive of "productivity" and "experience"

In his speech, Li Wei divided current AI applications into two major scenarios.

>>Productivity scenario:

AI provides efficiency value, assists people in completing work, and reduces costs and increases efficiency for enterprises. For example, automatic document generation, assisted coding, and intelligent report generation.

>>Pan-entertainment scenario:

AI provides experiential value and enhances the interaction experience between users and the virtual world. For example, natural conversations with game NPCs and emotional companionship chatbots.

Large models are evolving toward the AI Agent, a program that can plan, reflect, and use tools much as humans do.

The future of industry intelligence is following the path taken by autonomous driving.

02

From 'brute-force stacking of video memory' to 'fine-grained optimization', inference efficiency takes a leap forward

The high cost of computing power, frequent failures, and difficult maintenance are common pain points for many enterprises when deploying large models.

Li Wei pointed out that the trend is changing:

>>Small models replace large models, with no more reliance on "brute-force stacking of video memory";

>>Model framework and operator optimization significantly reduce video memory requirements;

>>Heterogeneous inference technology significantly reduces the computing resources required.

Longjing Technology has done three things in inference optimization:

High-concurrency support: From the underlying architecture to resource scheduling, throughput is improved across the board;

Inference latency reduction: By optimizing core operators, end-to-end latency is significantly reduced;

KV Cache Reuse: Supports greater concurrency and improves overall efficiency.
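
To make the idea of KV cache reuse concrete, the following is a minimal sketch and not Longjing Technology's implementation. It assumes a toy cache, here called `PrefixKVCache` (a hypothetical name), that only tracks which token prefixes have already been processed, and it counts how many tokens of a new request would still need prefill computation.

```python
class PrefixKVCache:
    """Toy prefix cache: records which token prefixes already have KV entries.

    Illustrative only. It stores every prefix explicitly, which is wasteful;
    production systems typically index cached blocks with hashes or a prefix tree.
    """

    def __init__(self):
        self._cached = set()  # set of token-prefix tuples seen so far

    def tokens_to_compute(self, tokens):
        """Return how many tokens still need prefill, then cache the new prefixes."""
        tokens = tuple(tokens)
        hit = 0
        # Find the longest prefix of this request that is already cached.
        for n in range(len(tokens), 0, -1):
            if tokens[:n] in self._cached:
                hit = n
                break
        # Only the suffix after the hit needs fresh computation; remember it.
        for n in range(hit + 1, len(tokens) + 1):
            self._cached.add(tokens[:n])
        return len(tokens) - hit


cache = PrefixKVCache()
first = cache.tokens_to_compute([1, 2, 3, 4])         # cold cache: all 4 tokens
second = cache.tokens_to_compute([1, 2, 3, 4, 5, 6])  # shared prefix: 2 new tokens
```

In this sketch, requests that share a long prompt prefix pay the prefill cost only once, which is one reason cache reuse can support more concurrent requests on the same hardware.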

03

Self-developed RAG system, pushing past the capability boundaries of large models

On site, Li Wei listed the pain points of traditional RAG one by one: insufficient knowledge parsing, inaccurate segmentation, inadequate query understanding, and uncontrollable generation quality. Longjing Technology's solution is built on fully domestic Ascend/Kunpeng AI infrastructure plus a self-developed RAG system, which delivers:

>>Multimodal parsing (VLM+ASR+OCR)

>>Small model for semantic segmentation, with adaptive chunk length

>>User intent understanding, multi-query recall

>>Vertical-domain embedding, precise handling of proprietary terms

>>Chain of thought + dual judgment, reducing model hallucination

This is not just a technological upgrade, but also a systematic guarantee for trustworthy generation.
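
As one illustration of the multi-query recall idea listed above, here is a minimal sketch under stated assumptions; it is not the RAG system described in the talk. A user question is rewritten into several queries (supplied by hand here), each query is ranked by a toy word-overlap retriever standing in for a vector search, and the rankings are merged with reciprocal rank fusion. The function names `retrieve` and `multi_query_recall` are hypothetical.

```python
from collections import defaultdict


def tokenize(text):
    """Lowercase whitespace tokenization; a stand-in for a real tokenizer."""
    return set(text.lower().split())


def retrieve(query, docs, top_k=2):
    """Rank documents by word overlap with the query (toy retriever)."""
    q = tokenize(query)
    scored = [(len(q & tokenize(d)), i) for i, d in enumerate(docs)]
    scored = [(s, i) for s, i in scored if s > 0]
    scored.sort(key=lambda x: (-x[0], x[1]))
    return [i for _, i in scored[:top_k]]


def multi_query_recall(queries, docs, top_k=2, k=60):
    """Merge per-query rankings with reciprocal rank fusion (RRF)."""
    fused = defaultdict(float)
    for q in queries:
        for rank, doc_id in enumerate(retrieve(q, docs, top_k)):
            fused[doc_id] += 1.0 / (k + rank + 1)
    # Highest fused score first; ties broken by document index.
    return sorted(fused, key=lambda d: (-fused[d], d))


docs = [
    "kv cache reuse lowers latency",
    "ascend server setup guide",
    "contract review clauses",
]
# Two rewrites of one user question, written by hand for this example.
hits = multi_query_recall(["ascend setup", "kv cache latency"], docs)
```

Issuing several rewrites recalls documents that a single phrasing would miss, and a document matched by more than one rewrite accumulates a higher fused score.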

04

The 'black technology' of trading storage for computation, accelerating inference

In terms of the synergy between computing power and storage, Li Wei from Longjing Technology presented a remarkable set of data on site.

Compared with a compute-only device environment, in a 30K+2K scenario with 10 concurrent requests:

>>TTFT latency reduced by 95%

>>TPS throughput increased 19-fold

Li Wei stated that the higher the concurrency, the more pronounced the throughput gain for inference, which is precisely the value of the "trading storage for computation" inference acceleration scheme.
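
For readers unfamiliar with the two metrics, the sketch below shows one common way to compute them from timestamps. It is an illustration under stated assumptions, not the benchmark used in the talk: TTFT is taken as the delay between sending a request and receiving its first output token, and TPS as the total output tokens divided by wall-clock time across all concurrent requests. The timestamps are made up for the example.

```python
def ttft(request_start, token_times):
    """Time to first token: delay from request start to the first output token."""
    return token_times[0] - request_start


def tps(requests):
    """Aggregate tokens per second over concurrent requests.

    requests: list of (request_start, token_times) pairs, times in seconds.
    """
    total_tokens = sum(len(times) for _, times in requests)
    start = min(s for s, _ in requests)
    end = max(times[-1] for _, times in requests)
    return total_tokens / (end - start)


# Hypothetical timestamps for two concurrent requests (seconds).
requests = [
    (0.0, [0.5, 1.0, 1.5, 2.0]),
    (0.0, [1.0, 2.0]),
]
first_token_delay = ttft(*requests[0])  # 0.5 seconds
throughput = tps(requests)              # 6 tokens over 2.0 seconds
```

Under these definitions, a 95% reduction in TTFT means the first token arrives in one twentieth of the baseline time.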

At the forum, Li Wei summarized Longjing Technology's path to deployment in three stages:

>>Early stage: reduce costs and increase efficiency, create "standard products";

>>Mid stage: behavior monitoring + content auditing, targeting healthcare, finance, military, government, and enterprise customers;

>>Late stage: Data governance → Data assets → Continuous subscription revenue.

The approach packages computing power into "standard products", so that an Ascend all-in-one machine can be brought online within 30 minutes.

Li Wei believes that "the more standardized, the more scenarios, the more hardware, and the lower the cost. This is a positive flywheel."

05

Linglong Shuzhi Big Model Application Platform, helping enterprises understand and make good use of large models

Linglong Shuzhi has launched the "Linglong Shuzhi Big Model Application Platform" to address the pain points of the many enterprises that do not understand large models, do not know how to use them, or cannot afford to use them.

It helps businesses:

Understand what a large model is and what it can do;

Quickly build intelligent applications that meet their own needs;

Realize cost reduction and efficiency improvement, data assetization, and business intelligence upgrading.

The platform supports multiple mainstream models (such as LLaMA, Qwen, and DeepSeek) and offers core capabilities such as multimodal parsing, semantic segmentation, intent understanding, hybrid recall, and chain-of-thought reasoning, aiming for content generation that is accurate, controllable, and traceable.

Currently, multiple typical scenarios have been successfully implemented:

1. The "Content Review" system of a certain finance bureau achieves automatic generation, semantic understanding, and dynamic chart insertion of official documents;

2. A certain law firm's "contract review" platform identifies clauses through a large model, compares templates, and outputs audit results;

3. A water plant in Nanjing and a military unit in Shaanxi have achieved efficient data governance and intelligent decision-making through private deployment.

Li Wei's speech drew a round of applause from the audience, not only for the technical depth and commercial clarity that Longjing Technology demonstrated in the field of AI agents, but also for the determination and action conveyed by the phrase 'If the mountain does not come to me, I will go to the mountain'.

After the forum ended, many attendees still gathered around Li Wei, continuing to explore the potential applications of AI agents in their own industries.

In the era of computing power, models, and algorithms, many people are waiting: for technology to mature, for costs to fall, and for the next moment.

The Longjing team has instead chosen a harder but more solid path, actively heading toward the mountain of "real industry demand".

For Longjing, applause is not only recognition but also a call to keep advancing. What we are doing is turning computing power into a 'ready to use' standard product, so that AI is no longer out of reach but becomes the 'intelligent colleague' of every industry and every position. The flywheel of 'standard → scenario → hardware → cost optimization' has begun to turn, accelerating toward the goal of 2026.